Acoustic Learning, Inc.

Absolute Pitch research, ear training and more

There seem to be three aspects of learning absolute pitch: chroma isolation, categorical perception, and structural decoding. If all three of these can be taught, it may result in true, musical absolute pitch.

1. Chroma isolation is required to make an absolute judgment of musical frequency.

This ability seems to be what's lost after the supposed critical period. When a child is naive about what he's meant to be listening for and how he's expected to interpret music, using absolute chroma to interpret musical sound could be no more difficult or peculiar than listening for traditional tonal relationships. An adult has learned to integrate and ignore chroma to make relative pitch judgments.

Absolute Pitch Blaster, by a perceptual differentiation strategy, teaches chroma isolation. One down, two to go.

2. Categorical perception is necessary for chroma to be recognized by its functional identity, not by its relative or subjective characteristics.

Chroma isolation does not lead directly to categorical perception. Naming chroma accurately is not an absolute-pitch judgment if the judgment is made based on perceived sensory qualities such as lowness or highness, descriptive adjectives, emotional effect, or similarities to other chroma. As with visual color or language phonemes, objective identity is perceived and labeled first; qualitative and subjective judgments are generally made upon reflection.

The most exact model for categorical chroma seems to be color learning. It might be possible that colors are learned by mere repetition of exposure to name-color pairs, but that would provide no explanation of why a child learns colors categorically, rather than "higher" or "lower" on a spectrum. Categorical perception must be induced by the tasks for which colors are used-- and colors are typically used for color coding. Red traffic light means stop, green means go. Differently-colored apples promise different tastes. Identical objects convey different meanings because of their color; and, because we recognize objects by learning their distinctive features, learning to recognize these objects requires learning to identify color.

To solve the problem, I will want to create tasks that require a learner to regard different chromas as functionally distinct. Tasks must be invented for which A-sharp means one thing while D means another. The task might be something as simple as labeling objects with different icons like Chordhopper already does, but a kitchen-sink approach may be necessary to induce categorical perception-- perhaps using microtones, octaves, timbres, tones, chords, key signatures, intensities, and more, so that chroma is a definitive feature of each object but offers its own peculiar representation of the category.
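As a rough illustration of what such a task could look like in software, the sketch below treats chroma as a code: the correct answer depends only on the chroma, while octave, timbre, and loudness vary freely. Everything in it is an assumption made for illustration (the `MEANINGS` table, the `Tone` record, the timbre labels, the numeric ranges); it does not describe Chordhopper or any existing program.

```python
import random
from dataclasses import dataclass
from typing import Optional

# Hypothetical task code: chroma number (C = 0 ... B = 11) -> what the tone "means".
MEANINGS = {10: "stop", 2: "go"}  # 10 = A-sharp, 2 = D

@dataclass(frozen=True)
class Tone:
    midi_note: int      # MIDI note number, where 60 is middle C
    timbre: str         # a label only; no sound is synthesized here
    loudness_db: float  # nominal playback level

def make_tone(chroma: int, rng: random.Random) -> Tone:
    """Build a tone with the given chroma and randomly chosen other attributes."""
    octave = rng.randint(2, 6)
    return Tone(
        midi_note=12 * (octave + 1) + chroma,
        timbre=rng.choice(["piano", "flute", "violin", "sine"]),
        loudness_db=rng.uniform(55.0, 80.0),
    )

def meaning_of(tone: Tone) -> Optional[str]:
    """Only the chroma decides the answer; every other attribute is a distractor."""
    return MEANINGS.get(tone.midi_note % 12)
```

Calling `make_tone(10, random.Random())` repeatedly yields A-sharps in different octaves, timbres, and loudness levels, all of which `meaning_of` maps to the same response.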

3. Structural decoding is required to give musical value to absolute perception.

It is no more effective (or possible) to listen for individual notes in music than to listen for individual letters in language. However, individual letters are learned from exposure to them in language. Once you're aware that the letter P exists, you're going to learn it more readily by hearing Peter Piper picked a peck of pickled peppers than you are by comparing pig and big. If you compare big and pig, perhaps you would learn that P is not B, and you might even become able to recognize a P versus a B, but hearing the Peter Piper sentence you'd only be able to tell that there are some P's in there somewhere. You wouldn't know where they were, or what function they served, or how to write out the sentence.

This will require nothing less than constant decoding and encoding of musical structures at all levels, from interval to sonata. I'll have to tackle this last because I don't yet know what the structures are myself. I also don't know whether it's possible for a computer program to easily generate an infinite number of legal melodies and phrases even with substantial data entry, but if there's a way to do it off-line or with MIDI instrument coaching I'll find it.

Object categories are generally formed by the cognitive act of sorting and labeling. So why, for 109 years, has every attempt to identify and label musical tones consistently failed to produce absolute categorical perception?

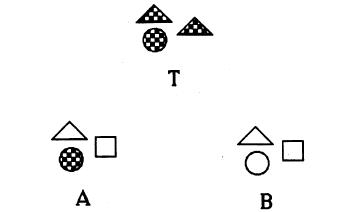

A possible explanation presents itself in this crazy little example from Rob Goldstone (and others). In this image, between A and B, which is more similar to T?

Now the fun part: again between A and B, which is more different from T?

This isn't a trick to fake you out; it's an honest example of how our minds work. The authors' explanation is that when we judge similarity we tend to look for relationships, but when we judge differences we tend to look for attributes. That's why T and B are more similar ("same shading scheme") but also more different ("does not display any checkered pattern"). This observation alone, if it is generally true, could explain why no method has ever taught absolute pitch.

Every perfect-pitch training system that's ever existed has operated just like this image. To train a student, the method offers an example tone T and asks students to identify unknown tones A, B, C, et cetera; in essence asking "is T the same as A or B?" But in judging similarity, a student will be paying attention to relationships, not attributes-- and that's relative pitch. An absolute pitch, by definition, has no relationship. Chroma is a single vibratory frequency; there is no second attribute for chroma to have a relationship to. If this image demonstrates the way our minds work, then any perfect-pitch instruction system may be, paradoxically, automatically doomed to fail because it asks you to name notes.

Deliberate categorization training does work, but it works for objects that have multiple characteristics. The categories can be arbitrary, unconscious, and practically indescribable (as with human faces) and still be trained effectively. But if categories are based on a single dimension, like width or length, categorical training will merely improve your ability to detect fine differences. Although you may become able to estimate magnitudes with good precision, you don't actually start making reliable categorical judgments.

Ordinary color perception shows, however, that learning categorical perception for a single attribute is not impossible. Furthermore, research has shown that color categories are highly dependent on the words we learn to name them. So there must be some process by which colors are learned, and mimicking that process should make it possible to learn pitch categories.

There is a common-sense explanation for how we learn colors as children: adults point at them and for each one tell us that's x. But this can't be enough. A fellow named Willard Quine, quoted here from How Children Learn the Meanings of Words, illustrates the problem.

[I]magine a linguist visiting a culture with a language that bears no resemblance to our own and trying to learn some words: A rabbit scurries by, the native says gavagai, and the linguist notes down the sentence 'Rabbit' (or 'Lo, a rabbit'), as tentative translation, subject to testing in further cases. ...[I]t is impossible for the linguist to ever be certain such a translation is right. There is an infinity of logically possible meanings for gavagai. It could refer to rabbits, but it could also refer to the specific rabbit named by the native, or any mammal, or any animal, or any object. It could refer to the top half of the rabbit, or its outer surface, or rabbits but only those that are scurrying; it could refer to scurrying itself, or to white, or to furriness.

The process of color learning can't be merely learning a color-name and associating it with a sensory stimulus. Nor can it be the simple detection of different qualities of color; it is possible to recognize that wagons, hydrants, stop signs, etc, all share a similar visual attribute ("red") while lemons, canaries, dandelions, etc, share a different version of the same attribute ("yellow")-- even to the point of being able to abstract, detect, and describe the "color" attribute-- and still not be able to recognize, remember, or name colors. Differentiation processes are the necessary start of the overall learning task (which is why Absolute Pitch Blaster is still going to be important) but once you are able to reliably detect a "color" attribute, you start making similarity judgments to name them. What relationship allows you to recognize the similarities between same-category colors of different brightness, hue, and saturation? The only category-relevant information in the sensory input is the frequency, and that has no internal relationship to anything but itself.

In short, I find it unlikely that color or pitch categories are formed by perceptual discrimination and differentiation alone. The general effects of language labels on categorical perception make me suspect that this is an issue of concept formation. What's traditionally been done in attempts to train absolute pitch is to present you with a sensory input (a sound vibration) and try to make you remember it somehow. But what if the necessary process is to form an independent concept and then match that concept with an available stimulus? So rather than seeing a bajillion different types of green colors and (eventually) figuring out that these are all "green", what if the color-category concept of "green" were learned, and all subsequent input served to reinforce and expand that already-existing intellectual concept?

That is-- to gain categorical absolute pitch, maybe we don't need to learn how to name notes. Maybe we need to learn, conceptually, what the names mean.

The questions I've been getting lately made me realize that I kicked off "Phase 17" without fully stating the current state of affairs. To date, still, no adult has been documented to have learned absolute pitch. Not one. Although anyone can easily learn to name notes by any method, I've asserted that none of this is "true" absolute pitch ability-- and now we have some scientific data to show it.

This year, some folks in Melbourne finally applied a brain scanner to the question, well, what about those people who have learned to name notes very well? The answer is clear: they're not using the same areas of their brain. Even when a musician identifies tones with as much as 85% accuracy, and even when their reaction times are as fast or faster than "real" absolute musicians, they still don't think of pitch the same way. Literally. Their brains paint an entirely different neural picture. Don't take my word for it, but read it yourself, and draw your own conclusions; nonetheless, the upshot (and the bottom line) certainly seems to be that even highly skilled note-naming ability is not necessarily the genuine article. It is very likely to be, as the authors classify, quasi-absolute pitch.

I'm pleased to see, too, that if I'm reading this correctly there's one case that can be confidently closed: using a "reference tone" to make absolute judgments is not absolute pitch. It's a right-brain activity that, neurologically, does not resemble actual absolute judgment. That's what their scans show. So you can learn reference-tone note-naming, and you might get pretty doggone good at it, and maybe it'll help your musicianship somehow, but it is demonstrably not absolute pitch.

It may not seem like a surprise that absolute musicians think differently about music-- it probably seems almost common sense-- but their new finding appears to support the revelation that Absolute Pitch Blaster (now Absolute Pitch Avenue) has uncovered, and that's this:

Absolute pitch ability is not the ability to hear tone chroma.

Now that was a surprise. Until Absolute Pitch Blaster/Avenue came along, tone chroma seemed to be the holy grail. Certainly, the advertisements we've all been subjected to champion the idea: if you can hear tone chroma, you can have absolute pitch-- and once you hear tone chroma, that is absolute pitch! The scientific literature seemed to believe it, too, if only implicitly, in the design of their experiments and the conclusions they drew from their experiments. But it's not true.

Absolute Pitch Blaster/Avenue does what no "method" has accomplished before: it can teach anyone to hear chroma. Commercial "methods" which have indeed urged their customers to listen for chroma have been unsystematic collections of hints and guesswork. They have always seemed to be methodical because they've contained plenty of "exercises", but you can do the exercises only if you already know what chroma is. The exercises are not designed to teach chroma. It is possible that someone has learned to hear chroma before now, but it was impossible to be sure of it; we've known since at least 1965 (Korpel) that chroma is the fundamental frequency of a tone, and no "method" of perfect pitch instruction existed that systematically, scientifically, objectively trained a person to perceive the fundamental frequency of a tone. We could only guess at what had truly been learned.
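To make the relation between fundamental frequency and chroma concrete, here is a minimal sketch that maps a frequency to the nearest of the twelve chroma names, ignoring octave. It assumes twelve-tone equal temperament with A4 = 440 Hz, which the text does not specify, and the function names are invented for this example. It shows only the arithmetic; it says nothing about how a listener perceives chroma.

```python
import math

# Assumption: 12-tone equal temperament tuned to A4 = 440 Hz.
A4_HZ = 440.0

# One name per chroma, C = 0 ... B = 11 (B-flat is the same chroma as A-sharp).
CHROMA_NAMES = ["C", "C-sharp", "D", "E-flat", "E", "F",
                "F-sharp", "G", "A-flat", "A", "B-flat", "B"]

def midi_number(freq_hz: float) -> float:
    """Continuous MIDI note number for a fundamental frequency (A4 = 69)."""
    return 69.0 + 12.0 * math.log2(freq_hz / A4_HZ)

def chroma_of(freq_hz: float) -> str:
    """Name of the nearest equal-tempered chroma, regardless of octave."""
    nearest_note = round(midi_number(freq_hz))
    return CHROMA_NAMES[nearest_note % 12]
```

Under these assumptions, 220 Hz, 440 Hz, and 880 Hz all come out as "A": doubling the frequency changes the octave but not the chroma.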

So I was thrilled when I determined that Absolute Pitch Blaster/Avenue can indeed teach anyone, even a completely untrained non-musician, to hear the fundamental frequency of a tone. (Which is, again, why I applied for a patent.) And if this was tone chroma, then the skilled musicians making such rapid progress were clearly learning what was surely tone chroma, and so was I...

...and it wasn't working. Any one of us could hear tone chroma ringing through, clear as clear can be; but even with a melody-reference, it became obvious that we were none of us absolutely certain about which chroma we were hearing. Many of us were making very close estimates, but I agree with Evelyn Fletcher; I reject the idea of "within a semitone" as an accurate guess.
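The difference between an exact answer and a "within a semitone" answer is easy to state as a scoring rule. The sketch below is a hypothetical scorer, not taken from any published test: chromas are numbered 0 to 11, distance is measured around the circle of twelve, and the `lenient` flag switches between the two standards.

```python
from typing import Iterable, Tuple

def chroma_error(answer: int, target: int) -> int:
    """Smallest distance in semitones between two chromas (0 to 6)."""
    diff = (answer - target) % 12
    return min(diff, 12 - diff)

def score(responses: Iterable[Tuple[int, int]], lenient: bool = False) -> float:
    """Fraction of (answer, target) pairs counted as correct.

    Strict scoring accepts only exact matches; lenient scoring also
    accepts answers one semitone away.
    """
    pairs = list(responses)
    allowed = 1 if lenient else 0
    hits = sum(1 for answer, target in pairs
               if chroma_error(answer, target) <= allowed)
    return hits / len(pairs)
```

A listener who is always one semitone off would score 1.0 under the lenient rule and 0.0 under the strict one, which is why the choice of rule matters when judging whether a tone has really been identified.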

So that's what "Phase 17" is about. It's possible to learn to hear tone chroma; thanks to Absolute Pitch Blaster/Avenue, it's easy for anyone to learn to hear tone chroma. But if learning to hear tone chroma does not lead directly to absolute pitch judgment... well, then, what does?

"Categories are categories of things. Since we understand the world not only in terms of individual things but also in terms of categories of things, we tend to attribute a real existence to those categories. We have categories for biological species, physical substances, artifacts, colors, kinsmen, and emotions, and even categories of sentences, words, and meanings. We have categories for everything we can think about."

So wrote George Lakoff in his book about categorization theory entitled: Women, Fire, and Dangerous Things. The title is, of course, a clever illustration of his book's main point. (Here's a somewhat lengthier analysis of the entire book.)

Categories are formed around prototypes-- but, asks Lakoff, what is a prototype? Traditionally, a categorical prototype has been assumed to be an ideal physical representative of its category, and categorical membership based on degree-of-similarity to the prototype. Lakoff, however, argues that a prototype is not a physical exemplar, but a mental structure, and categorical membership is based on goodness-of-fit to this structure. For example, women, fire, and dangerous things may all belong to a single category, but such a category can't possibly have an ideal physical member; instead, you can easily assess how well something fits into this category because you possess a concept-structure of "harmful things." A category's "prototype" is an ideal version of its members' shared characteristics-- but its definitive characteristics may be physical or imagined, literal or learned, and expressed in an infinite variety of ways.

It may be, therefore, that categorical perception is formed around a concept, not a sample. This is obviously true for the imaginary "dangerous things" category, but less obviously true for a physical category like "major third". People often argue about which is a better prototype-- equal temperament or just tuning-- and this certainly seems to be a quibble about modeling a physical prototype. Whether you define a "major third" as the ratio of 5/4 or 635/504, an ideal of that ratio clearly exists in reality, and it's common practice, if not common sense, to evaluate major-thirdness by comparing a sound to this physical prototype. However, the physical composition of a musical sound is not a prototype, even if its ratio is mathematically exact. The prototype of a musical interval is a mental structure.
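For readers who want to see how far apart the two candidate "physical prototypes" are, the short calculation below converts each ratio to cents (1200 cents per octave). The only assumption is the standard definition of the equal-tempered major third as four of twelve equal semitones, which 635/504 approximates.

```python
import math

def cents(ratio: float) -> float:
    """Size of a frequency ratio in cents (1200 cents = one octave)."""
    return 1200.0 * math.log2(ratio)

just_third = 5 / 4               # just-tuned major third
tempered_third = 2 ** (4 / 12)   # equal-tempered major third, 400 cents by definition
approx_third = 635 / 504         # rational approximation of the tempered third

for name, ratio in [("5/4", just_third),
                    ("2^(4/12)", tempered_third),
                    ("635/504", approx_third)]:
    print(f"{name}: {cents(ratio):.2f} cents")
```

The just third comes to about 386.31 cents, so the two tunings differ by roughly 14 cents, while 635/504 lands within a thousandth of a cent of the tempered value.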

To begin with, any musical sound contains information relevant to categories other than interval. Timbre, intensity, duration, attack and decay, metric position (such as "downbeat"), and location in space are all present, and imply identities other than an interval; subjective but legitimate categories like "well-played" or "gently sung" can also apply. No existing musical sound can possibly serve as a "major third" prototype because it must simultaneously be many other things as well.

More compellingly, the physical identity of "major third" is not consistent. There are at least three types of major third: the major-third scale degree, a major-third harmony, and a major-third interval. Each of these perfectly matches the imagined prototype of "major third", but none of them are physically identical. It seems improbable that an expert musician has memorized and internalized an ideal exemplar of each of these three different physical types; it seems more likely that they have learned to abstract the major-thirdness of them all.

This would mean that when you're training your musical ear, you don't want to learn and memorize prototypes. You don't want to train yourself to recognize new sounds only by comparing them to an ideal sample you have stuck in your head. It isn't a matter of mere efficiency; rather, it's the difference between learning the functional, meaningful, and desirable musical structure of major third versus the useless, unimportant, and isolated non-musical group of "sounds which resemble this other one I can remember."

How do we develop categorical judgment? I asked Rob Goldstone about it, and his personal answer was similar to his published answer: we don't know.

"We do not know... the precise course along which this ability develops: what triggers it; whether internal mechanisms or external cues are more powerful in shaping it; how concepts are 'born' in our heads; or how do we end up, as adults, to flexibly shift between several possible interpretations for one stimulus in accordance with contextual demands." (Goldstone, 2007)

Nonetheless, as Lakoff's book illustrates, the newest data seem at least to be killing the theory of the physical prototype. Goldstone continues:

"[R]elatively recently we cognitive scientists have changed our view about categorization. We have moved from considering taxonomies (or categories based in logic) as the 'real,' mature kind of categorization to understanding that there are multiple kinds of similarities that are taken into account when one groups items."

If this is true, then it shoots a massive hole in the fundamental premise of much ear training-- that learning musical sounds means learning what they sound like. The full identity of any object, even a musical sound, goes well beyond its physical attributes, encompassing every interpretation that can be imagined for it.

Here is a question that you should answer before reading any further. Don't overthink it; it's not meant to be a trick question. Just say (or write down) the first few things that come to your mind. If you answer this sincerely and easily, you'll have some context for what comes afterward.

What is an E-flat?

Got your answer? Okay... moving on.

We don't perceive objects by their physical appearances. We perceive objects by what we know about them. When we haven't learned anything about an object, naturally all we can know is what it looks like or sounds like; but once we do learn something, it becomes an inextricable part of what we "see". How many times have you heard, or said, after learning some new fact, "I'll never look at x the same way again"? This isn't just a figure of speech; it reflects the fact that our perception of object x has fundamentally changed.

An article by French researchers explains the difference. If some Object X is best recognized by its physical features, then it is a natural object. If Object X is best recognized by its functional purpose, then it is an artifact. These researchers discovered that they could easily figure out which was which by asking children the simple question, "What is Object X?" Perceptual answers indicated a natural object; functional answers indicated an artifact. I can easily show you what I mean: for each of the following objects, notice that your first instinct for some of them will be to say what it is (and what you can directly observe) and for others will be to say what it does (and what you know about its purpose).

What is water?

What is soap?

What is a tree?

What is a computer?

What is a cow?

What is a ruler?

For some of these, you might have given answers that were not about physical appearance or composition, but were still natural observations (like "a cow moos and eats grass"). It is true that you can make functional observations of natural objects; you could say that a cow can be made into leather, or into burgers. However, you probably have to consciously think about it before you come up with answers like that-- unless you happen to be a tanner or a butcher, in which case, to you, a cow is a functional artifact first and foremost. To these professions, what a prototypical cow looks like, and how it behaves, is irrelevant. Conversely, you can make natural observations of an artifact like a computer (weight, length and width, surface temperature), but they're not what you'll think of first, and they don't really tell you anything meaningful-- unless you happen to be mounting it in a cabinet, at which point its physical characteristics become crucial.

In short, an object's primary classification rests entirely on which of its definitive features are most meaningful to you, the observer, at the moment you need to categorize it. If, like the computer that needs mounting, you interact with an object in a way that renders its function irrelevant, you will become very aware of its natural characteristics. If, like the butcher and his cow, you interact with a natural object in a way that trains you to make use of it, then you will no longer perceive it primarily as a natural object, but as an artifact. In fact, with increased functional knowledge, you can come to perceive familiar objects as completely different where once they seemed practically identical-- and this total transformation happens without the slightest change to the image your senses receive.

The French research shows how this process can be trained. They wanted to find out if children could be made to recognize the same objects as either natural objects or artifacts. Indeed, their experiments showed that "...training the children to look for common visual properties helped them to categorize natural objects at the superordinate level, whereas asking them to seek common functions improved their categorization of artifacts" (Bontheaux, 2007).

So, going back to that other question. What is E-flat?

Did you describe what it sounds/feels like (a natural object) or what you use it for (an artifact)? And if you described it as an artifact-- as you may have done, if you've had an amount of practical musical experience-- ask yourself, were you thinking as you described it of the physical sensation that lives in the air, or of the theoretical representation that sits silently on a page? Try either one; my guess is that if you describe the sound in the air, you'll talk about it as a natural object, and if you have the experience to describe the dot on the page, you'll talk about the artifact. (Whether that is or isn't the case, here's a thread to talk about it.)

If you've followed this far, you probably see where I'm going with this. It may be that absolute categorical perception of auditory pitch can be induced by drawing attention away from its physical characteristics-- or anything about it that can be directly observed-- and emphasizing its functional purposes instead.

The most obvious problem, of course, is figuring out how to describe the functional purposes of an object that has long been argued to have no functional purpose.

At this point my theory of how absolute pitch is learned seems essentially complete. The next step is not theory, but action. It's not quite do or die-- there might be some tremendously significant factor I'm overlooking-- but I can see now a complete process, from apparent beginning to logical end, and there's little more I need do except create a training system that represents that process.

The critical premise: musical pitches are learned in the same manner as language phonemes. If someone undergoes the same process as that for learning phonemes, while substituting musical structures for language structures, then absolute pitch should be learned.

Why has no one learned absolute pitch before now? Because "pitch" has been treated as a single generic concept. We don't truly think of the musical scale as 12 different categories; rather, it is a single span of pitch-energy which encompasses 12 different levels. Every absolute-pitch training method that has ever existed is based on this faulty premise. No would-be teacher of absolute pitch has ever done anything more than invent some clever new way to estimate the magnitude of a given level. Meyer (1899) and many others have tried outright memorization; Maryon was the first to do it by direct association with color frequencies; Brady did it by memorizing a "zero" point for reference; still others have assigned adjective-qualities to each level ("twangy", "mellow", etc). All have failed to teach absolute pitch, because each of them has, in its own way, continued to reinforce the misguided practice of "measuring" pitch level.

You don't think of colors that way. When you are shown a single blue blotch and asked "What is this?" you answer blue. It wouldn't occur to you to answer a color and then figure out which one. In your mind, the dominant identity of blue is its blueness, not its color height. Even though, in mathematical fact, blue is a more-energetic orange, to our common sense such an idea is patently absurd. Each color is a unique and separate concept. Even when we see direct evidence of the color spectrum in a rainbow gradient, we can't help but think red, orange, yellow, green, blue, and purple have been mashed together. We know each color as an independent concept. We can be intellectually convinced that blue and orange are different levels of color, and that all colors lie along a spectrum, but we still wouldn't be able to perceive blue as a "higher" orange. No normal person would ever expect to identify color categories by learning to estimate "height" of light-energy.

You don't think of phonemes that way. You don't even consciously know what their sensory characteristics are, much less how to measure them. Is "ee" higher or lower than "ah"? Who knows? Who cares? The comparison is nonsensical. Even if you learn that each of these vowels is in fact a musical interval (which they are), and that "ee" is mathematically "higher" than "ah" (which it is), you won't be able to hear these qualities unless you consciously stop listening to them as language sounds (and even then it's still difficult to detect).

Neither colors nor phonemes are directly perceived as inputs to be measured or estimated. Instead, each sense-value directly evokes the concept it embodies. Neither colors nor phonemes, as we ordinarily perceive them, are a unified range of levels, but a collection of unique categories. The members within each collection are independently defined by their function, not their appearance. The order in which we list them is arbitrary. In other words, blue is a color because we use it as a color, not because we conceive of it as a color; we recognize blue when a certain energy of light-wave activates the concept of blueness, not because we mentally measure a waveform's "color level".

This is largely why I've finally soured on using melody associations as a strategy for absolute pitch development. Using a melody to identify a pitch can only be effective if you remember the melody at the correct pitch level. One-to-one melody association provides nothing more than yet another way to estimate the magnitude of an undifferentiated sensation. Melody association does not create separate conceptual categories for each pitch.

To train absolute pitch, the end goal is to create a distinct and unique concept for each pitch value, and to base that concept on its function, not its appearance. Each pitch value must become a symbol, not a sensation; each pitch concept must be independent of-- not defined by-- what the pitch actually sounds like. It seems like a contradiction: to learn what a pitch sounds like, you must learn to ignore what a pitch sounds like. But, like a color or a phoneme, the subjective quality of a pitch is ultimately irrelevant. What matters is what it means.

And so, here, I've described the destination; next, I take a shot at explaining the journey.

To discover the learning process, we must look to children. Although it has not yet been conclusively demonstrated that adults are incapable of learning absolute pitch, the available evidence consistently demonstrates that only children ever have. And they surely have learned it. Conceptualizing a certain swath of sound energies as twelve unrelated yet associated categories, wholly abstracted from their origin and cause, is not a natural interpretation of the world. Regardless of genetic predispositions or neural aberrations, the musical scale is a human imposition; if it is to be known at all, it must be learned. If children learn absolute pitch by a perceptual-learning mechanism, adults should be capable of learning it, as they possess the same mechanisms.

The principal trick is convincing an adult to use those mechanisms. Learning is driven by need-- people learn only when their goals require new knowledge. A child needs to know how to listen to music, which makes new learning necessary. An adult already knows how to listen to music, which makes new learning difficult. To reawaken an adult's need to learn, their existing knowledge must somehow be made to fail.

Any training must also teach the target knowledge. This would seem common sense, but it's distressingly easy to be fooled. Educational systems usually teach their students how to pass tests, which inevitably renders the supposed content generic, irrelevant, and meaningless. For example, I tried out "melody triggers" with this widget a while ago (here's a Mac version) and in a startlingly short period of time I could name notes flawlessly. Even now, I can still use this widget to name notes with perfect accuracy, at a speed rivaling or exceeding that of Miyazaki's absolute listeners (1988). But whenever the melody association falters, I can listen to a note repeatedly and be utterly unable to answer, my mind a blank. Without a melody, I haven't the slightest clue, because there are no other clues. All I learned from this process was how to associate tones with melodies; I didn't (and won't) learn to recognize the tones. All note-naming training methods to date have provided clever workarounds to help you successfully pass a naming test, but what you really learn is the workaround, not the notes' identities. An adult who wishes to learn genuine absolute pitch must dedicate themselves to a training task which cannot be accomplished except by using genuine absolute pitch skill. That task must be structured to teach absolute pitch the way it's actually learned, not through some clever pretense. To acquire full absolute pitch at any age, a musician must discover and practice a style of musicianship that not only uses perfect pitch, but will fail without it.

Again, here's what it boils down to: Like colors, the twelve tones are to be learned as separate and unrelated categorical concepts. Like phonemes, these tones are to be integrated with a linguistic comprehension of musical sound.

A category is learned by examining objects that belong to that category alone. Comparing between categories doesn't help. As this illustration showed, when you compare two "different" objects, your attention is drawn to the objects' individual characteristics, but when you compare two "same" objects, you look for common relationships. Ordinarily, we don't recognize objects by their individual characteristics, but by the invariant relationships among their shared characteristics. Therefore, to learn how to recognize an object, we need to make same comparisons. In other words, you don't learn the concept of cat by comparing cats to dogs, llamas, dump trucks, mailboxes, coffee mugs, or any of an infinite number of other objects, and making lists of characteristics that belong to each. You learn cat by synthesizing your various experiences of cats. The process of categorical learning remains the same when transferred to musical tones-- you don't learn the concept of A-flat by comparing A-flats to G's, B's, F-sharps, or any of an infinite variety of sounds, and figuring out which properties belong to each; you learn A-flat solely by synthesizing your various experiences of A-flats.
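To make "your various experiences of A-flats" concrete, here is a minimal sketch of how a set of same-category examples might be assembled. It is only an illustration, not a description of how Absolute Pitch Avenue works: the function names are hypothetical, and it assumes twelve-tone equal temperament with A4 tuned to 440 Hz over the 88-key piano range (MIDI notes 21 to 108). It also varies only the octave; a real training set would presumably vary timbre, loudness, and duration as well.

```python
from itertools import combinations

# Pitch-class names indexed by MIDI note number modulo 12 (C = 0).
NOTE_NAMES = ["C", "C#", "D", "Eb", "E", "F", "F#", "G", "Ab", "A", "Bb", "B"]


def pitch_class_frequencies(name, low_midi=21, high_midi=108, a4_hz=440.0):
    """Return every frequency (Hz) in the range that belongs to one pitch class.

    Assumes equal temperament: f = a4_hz * 2 ** ((midi - 69) / 12).
    """
    pitch_class = NOTE_NAMES.index(name)
    return [
        a4_hz * 2 ** ((midi - 69) / 12)
        for midi in range(low_midi, high_midi + 1)
        if midi % 12 == pitch_class
    ]


def same_category_pairs(name):
    """Pair up members of a single pitch class for "same" comparisons.

    No other pitch class ever appears, so there is nothing to contrast against.
    """
    return list(combinations(pitch_class_frequencies(name), 2))


if __name__ == "__main__":
    for low, high in same_category_pairs("Ab")[:3]:
        print(f"compare {low:.2f} Hz with {high:.2f} Hz (both A-flat)")
```

Every pair the sketch produces is a "same" comparison: two different sounds that share only their membership in the A-flat category.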

This, I'm pleased to say, is what Absolute Pitch Avenue (formerly Absolute Pitch Blaster) already does, at least to some extent. That is, Absolute Pitch Avenue focuses exclusively on within-category comparisons. I designed it with perceptual differentiation in mind, and because that is the proper first step, I'm relieved that I don't have to re-invent the process; I only have to build it further to induce categorical learning. The process should create meaningful identities for each pitch category, and allow chroma perception to arise as a matter of course. Absolute Pitch Avenue is an important first step, because it reveals the basic learning mechanism, but this mechanism needs to become the internal engine of the training's active process. If you undergo meaningful within-category training, the mechanism of perceptual differentiation should automatically make it possible to hear tone chroma; simultaneously, the nature of same comparisons should automatically create an understanding of how that chroma definitively relates to all the other characteristics of any particular sound.

This, I'm not so pleased to say. If training can automatically activate perceptual-learning mechanisms by providing meaningful experience, then the training materials need to deliver a meaningful experience. Absolute Pitch Avenue successfully activates the perceptual-learning mechanisms, but doesn't provide the meaningful experience needed to complete the learning-- and this is the mind-boggling issue. How on earth are pitch categories made meaningful? It seems that answering this question may mean solving the problem.

I think of how colors are learned. Although colors are generally presented as a set, each individual color is learned by identifying objects that embody the concept. We don't say that objects look like green; they are green. Green is the grass. Green is a turtle. This basic categorical understanding is made more sophisticated by omnipresent color-codings. Children understand that objects with identical shapes may have different functions, and can recognize that the shelf for toys is green while the shelf for books is yellow.

I think of how phonemes are learned. Each individual letter is learned by identifying objects that embody the concept. Sesame Street exemplifies exactly this process, in which letters exist as various objects. C is for cookie, cupcake, car; M is for monster, meatball, monkey. A letter is not learned as a short sound broken off the front of a longer sound. Rather, a letter-category ("M") is populated with concepts, which concepts evoke their respective names ("monster", "monkey"). Once a child learns and knows that these concepts all belong to the M category, then comparing their word-names causes the common phoneme to be extracted. The word-sounds are utterly arbitrary; they could not be remembered except as evoked by their associated concepts. It is, therefore, the act of naming that creates letter-categories.

This may be how absolute pitch is learned. Each individual pitch may be learned by identifying objects that embody the concept. I took another look at Gebhardt's case study and particularly noticed the language reported by little "A.R." as his absolute skill developed. Talking about a car horn, A.R. said "it was a G", not "it produced a G." Told that a trio was playing Beethoven, he said "no, that is D-major," not "it is being played in D-major." This may be the mechanism that We Hear and Play exploits in its use of colored balls to represent each pitch. A ball is red, it is round, it is plastic; a child can learn that it is a C-pitch in the same way they learn that it is a "ball". Names are external, arbitrary associations; for each ball, a child has as much reason to learn a pitch-sound as any other name-sound, and the act of naming each ball helps create the pitch categories.

Does this describe how an adult can learn absolute pitch?

At first glance: it doesn't. The color model suggests that the best thing would be to rush out and start listening to fixed pitches in one's environment-- microwave beeps, elevator bells, ring tones, etc.-- but that exact strategy has been thought of before, and if it were the solution, it would've worked by now. The phoneme model suggests that the best thing would be to associate melodies with tones, but the melody-association strategies that have been tried so far are ultimately flummoxed by adults' unconscious knowledge that pitches are "levels" of sound.

On second thought: it has to. If pitches are essentially and fundamentally like colors and phonemes, and this explanation of how colors and phonemes are learned is reasonable, then absolute pitch learned by the same processes and mechanisms would demand the same embodiments, namely, environmental objects and melody-words. Perhaps the reason these types of sounds have been considered is that they are indeed, obviously, the sounds you'd want to use-- but they've never worked to teach absolute pitch because training systems have used them inappropriately. If I'm going to develop a training system that works, I need to figure out how to use environmental pitches and melodies in a way that has never been thought of before.

In the meantime, fortunately, Absolute Pitch Avenue is still worth having out there. It does present the phoneme-word model, just with nonsense words, so while it can teach you to recognize chroma, the conceptual anchors for each pitch category are weak. I mean, you could learn the sound of the D phoneme by hearing someone say D-laden babble like dobsy buddso difdum dodle tud-- this is an unconscious process called "statistical learning"-- but you would only learn how to recognize the D-sound. You wouldn't learn what D actually means. This is where Absolute Pitch Avenue is right now, which makes it more useful to skilled musicians than to unskilled hobbyists, but it's the right base to start from, and Ear Training Companion upgrades will continue to be free while I figure out the rest.

The bottom line is that each pitch category needs to be populated with concepts. The principal difficulty in finding effective concepts is that adults' understanding of sound is not embodied and integral (a bell is F-sharp) but causal and dissociated (a bell produces F-sharp). This means that an adult will not naturally recognize a meaningful difference between two bells that produce different pitches, or between two identical melodies in different keys. Nonetheless, the task before me is to discover meaningful concepts and transform them into a pitch category. So rather than continue to speculate about what needs to be done, I mainly need to try to do this and get some results, one way or the other.